Data Warehouse

Overview

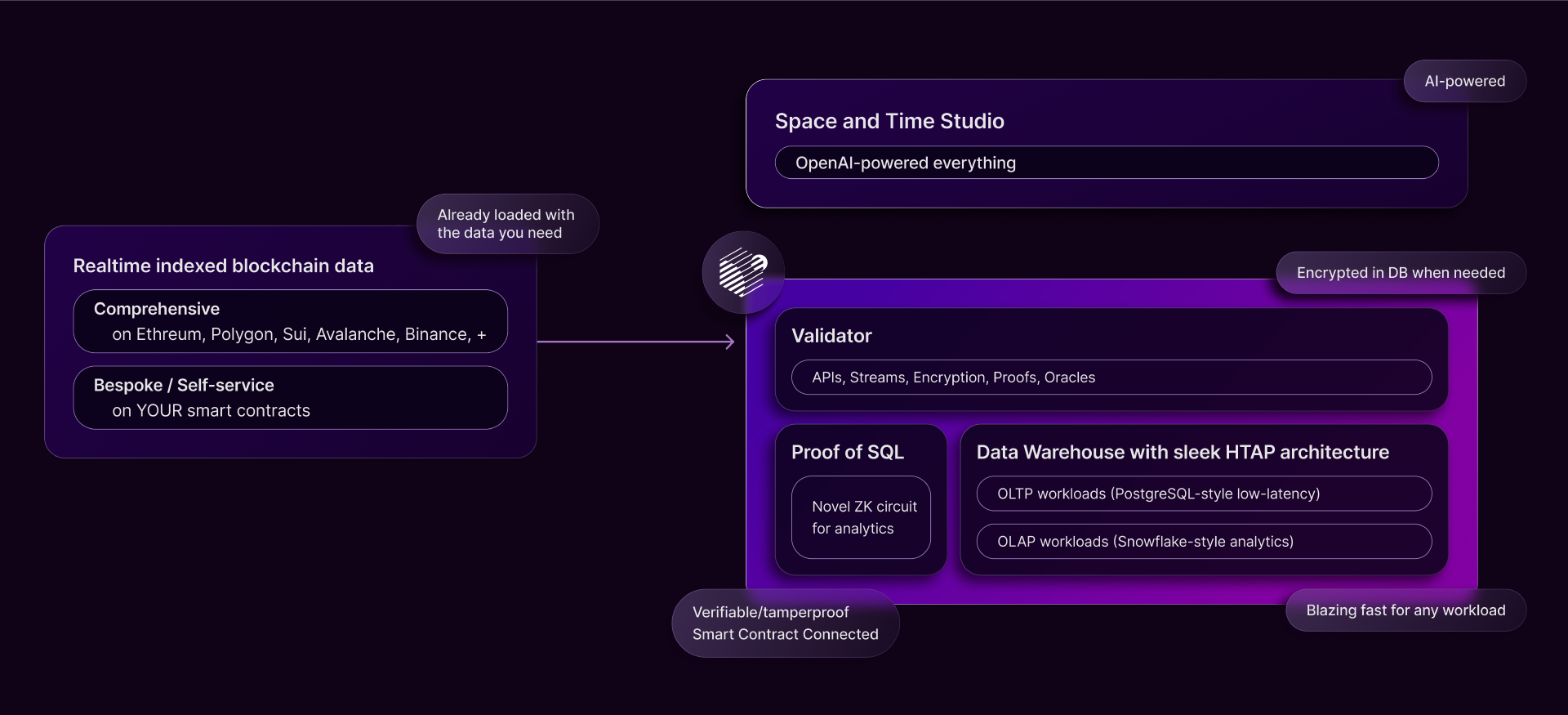

The Data Warehouse is the backbone of the Space and Time network. It’s a decentralized, Web3-native hybrid transactional/analytic processing (HTAP) engine that enables trustlessness, scalability, and lightning-fast performance for any data workload.

Data warehouse operations

The Space and Time Data Warehouse is composed of multiple clusters operated in a permissionless manner by a network of node operators. These clusters, spread across the Space and Time data fabric, are the workhorses of the system. They are responsible for performing the five major data operations:

- Data Ingestion - saving data from external sources

- Data Transport - warehouse-to-warehouse data transfer

- Data Storage - persistent storage of data, with the ability to view it as of any point in time

- Data Transformation - data cleaning, aggregations, multi-source data joins

- Data Serving - easy and performant access to data, intelligent caching, creation of data APIs

In order to perform all of these operations within the context of a single warehouse node, Space and Time employs an incredibly flexible warehousing solution: HTAP.

The necessity for HTAP

Hybrid transactional/analytic processing (HTAP) data stores are popular, and for good reason. With good workload management, they promise a system that can adapt to whatever workload it is given. This is exactly what Space and Time requires as an end-to-end data platform. Warehouse nodes must be generic enough to serve high-throughput ingestion one minute and aggregate over the terabytes just ingested the next. That calls for an HTAP system.
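The mixed workload described above can be sketched in a few lines. This is only an illustration of the access pattern (a transactional write path followed by an analytic read over the same store), not Space and Time's engine: it uses Python's built-in `sqlite3` module as a stand-in, and the `transfers` table, its columns, and the helper names are all hypothetical.

```python
import sqlite3

def build_store():
    # In-memory SQLite database used purely as a stand-in for a warehouse node.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE transfers (block INTEGER, wallet TEXT, amount REAL)"
    )
    return conn

def ingest(conn, rows):
    # Transactional path: the batch is committed atomically,
    # or rolled back if any insert fails.
    with conn:
        conn.executemany("INSERT INTO transfers VALUES (?, ?, ?)", rows)

def total_by_wallet(conn):
    # Analytic path: aggregate over everything ingested so far.
    cur = conn.execute(
        "SELECT wallet, SUM(amount) FROM transfers "
        "GROUP BY wallet ORDER BY wallet"
    )
    return cur.fetchall()

conn = build_store()
ingest(conn, [(1, "alice", 10.0), (1, "bob", 5.0), (2, "alice", 2.5)])
print(total_by_wallet(conn))  # [('alice', 12.5), ('bob', 5.0)]
```

The point of the sketch is that both functions run against the same store with no export step in between. A production HTAP system additionally has to manage the two workloads so that heavy aggregations do not starve ingestion.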

The fabric of the system

Space and Time’s HTAP system is impressive as a standalone system, but plugging it into the Validator vastly extends its capabilities. Data can be passed freely around the Space and Time platform. To readers familiar with recent developments in the Web2 space, this will sound very similar to a data fabric:

…an architecture and set of data services that…standardizes data management practices and practicalities across cloud, on premises, and edge devices.

Space and Time as a platform is the world’s first decentralized data fabric, and it unlocks a powerful but under-served market: data sharing. Within the Space and Time platform, companies can freely share data and transact on that sharing using smart contracts. Furthermore, datasets can be monetized in aggregated form through Proof of SQL, without giving the consumer access to the raw data. The data consumer can trust that the aggregation is accurate without seeing the data themselves, so data providers no longer have to be data consumers. For this reason, the combination of Proof of SQL and a data fabric architecture has the potential to democratize data operations, as anybody can contribute their part in ingesting, transforming, and serving a dataset.
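As a rough illustration of aggregated sharing, the sketch below shows a provider that keeps raw rows to itself and serves only an aggregate. It deliberately does not implement Proof of SQL: no cryptographic proof is produced here, so a consumer of this toy class would simply have to trust the provider. The `DataProvider` class, its method, and the sample data are hypothetical.

```python
from collections import defaultdict

class DataProvider:
    """Hypothetical provider that holds raw rows and serves aggregates only."""

    def __init__(self, rows):
        # Raw (region, amount) rows stay with the provider.
        self._rows = list(rows)

    def revenue_by_region(self):
        # Only the aggregated totals leave the provider.
        totals = defaultdict(int)
        for region, amount in self._rows:
            totals[region] += amount
        return dict(totals)

provider = DataProvider([("eu", 120), ("us", 300), ("eu", 80)])
result = provider.revenue_by_region()
print(result)  # {'eu': 200, 'us': 300}
```

In the platform described above, the gap this sketch leaves open (proving that the aggregate was computed correctly over the underlying data) is the role the text assigns to Proof of SQL.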
